Data engineering tools

Are you navigating the complexities of managing large-scale data operations? This challenge is becoming increasingly common as businesses accumulate vast amounts of data.

In this article, we will delve into the essential tools that can transform your data engineering efforts, addressing the hurdles posed by data volume and complexity while highlighting the importance of streamlined processes.

By the end of this article, you will discover the most popular data engineering tools, understand how they enhance data processing, and learn what factors to consider when selecting the right solutions for your organization.

Best Data Engineering Tools for Large-Scale Operations

When managing large-scale data operations, choosing the right tools is essential to streamline workflows, maintain data quality, and ensure efficient processing. Here are some top tools suited for handling large-scale operations:

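Apache Spark: A distributed processing engine for businesses managing massive datasets.

Spreads computation across a cluster so large datasets are processed in parallel

Supports near-real-time stream processing (Structured Streaming) as well as batch analytics, SQL, and machine learning

Keeps intermediate data in memory where possible, which can cut the time and cost of heavy workloads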

Amazon Redshift: A managed cloud data warehouse for storing large datasets and querying them quickly.

Scalable, columnar warehouse architecture suited to large datasets

Runs complex analytical SQL queries with high performance

Integrates with other AWS services such as Amazon S3 and AWS Glue, which simplifies building end-to-end pipelines

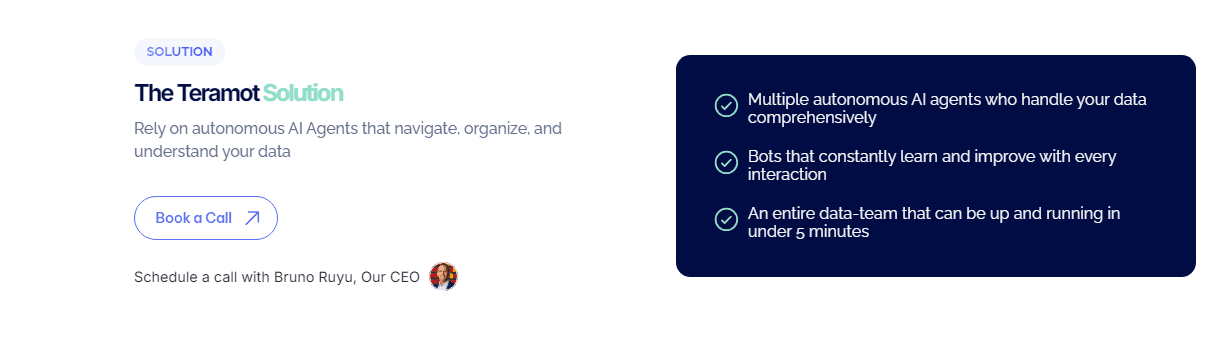

Teramot: A comprehensive platform for agility, flexibility, and privacy.

Up-to-date insights from easy integration with diverse data sources

Enhances operational efficiency through automation, minimizing manual efforts

Provides scalable solutions that grow with your business
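Spark's core pattern is to split a dataset into partitions, process each partition independently on cluster executors, and then merge the partial results. The sketch below imitates that partition, map, and combine pattern on a single machine using only the Python standard library. It is not Spark code: the threads merely stand in for executors (they do not give real parallel speedup for CPU-bound Python), and the sample records and the `count_events` helper are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce

def partition(records, n):
    """Split records into n partitions round-robin, as a cluster would split a dataset."""
    return [records[i::n] for i in range(n)]

def count_events(part):
    """Map step: count event types within a single partition."""
    return Counter(event for _, event in part)

def merge(left, right):
    """Combine step: add two partial counts together."""
    return left + right

# Hypothetical sample data: (user_id, event_type) pairs.
records = [
    (1, "click"), (2, "view"), (3, "click"),
    (4, "purchase"), (5, "view"), (6, "click"),
]

# Partitions are processed independently; threads stand in for cluster executors.
with ThreadPoolExecutor(max_workers=3) as pool:
    partials = list(pool.map(count_events, partition(records, 3)))

totals = reduce(merge, partials, Counter())
print(totals.most_common())  # [('click', 3), ('view', 2), ('purchase', 1)]
```

In PySpark, the same aggregation would typically be written with something like `rdd.map(...).reduceByKey(...)` or a DataFrame `groupBy`, and Spark takes care of partitioning, scheduling, and recovering from failed executors.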

Recommended reading: What is Engineering Data Management?

How do data engineering tools streamline data processing?

At Teramot, we know that efficient data processing is key to turning raw information into valuable insights.

Our data engineering tools automate everything from data collection and transformation to integration, so you can handle large datasets without the need for time-consuming manual processes.

We bring together disparate data sources into a unified system, letting you focus on the insights rather than the process itself.
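Unifying disparate sources usually comes down to mapping each source's fields onto one shared schema and resolving records that describe the same entity. The sketch below illustrates that idea in plain Python; it is not Teramot's implementation, and the source names, field mappings, and email-based matching rule are hypothetical.

```python
# Hypothetical field mappings from each source's column names to one shared schema.
FIELD_MAPS = {
    "crm": {"customer_id": "id", "full_name": "name", "email": "email"},
    "billing": {"acct": "id", "name": "name", "email_address": "email"},
}

def to_unified(source, record):
    """Rename one source record's fields to the shared schema."""
    return {target: record.get(field) for field, target in FIELD_MAPS[source].items()}

def unify(sources):
    """Merge records from all sources into one row per normalized email address."""
    unified = {}
    for source, records in sources.items():
        for record in records:
            row = to_unified(source, record)
            row["email"] = (row["email"] or "").strip().lower()  # normalize the match key
            merged = unified.setdefault(row["email"], {})
            for field, value in row.items():
                # Earlier sources win; later sources only fill fields that are still empty.
                if merged.get(field) in (None, ""):
                    merged[field] = value
    return list(unified.values())

sources = {
    "crm": [{"customer_id": "C1", "full_name": "Ada Lovelace", "email": "ada@example.com"}],
    "billing": [
        {"acct": "B7", "name": "", "email_address": " ADA@example.com "},
        {"acct": "B8", "name": "Alan Turing", "email_address": "alan@example.com"},
    ],
}
rows = unify(sources)
```

The precedence rule (first source wins, later ones fill gaps) is one simple choice; real integrations often rank sources by trust or recency instead.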

We also prioritize data quality and consistency by building validation and transformation checks into our tools. These checks keep errors and inconsistencies to a minimum, so your data is always reliable. Here’s how we make that happen:

Automated Data Cleaning: Our tools catch errors, fill in gaps, and streamline your datasets with minimal oversight.

Data Transformation Templates: Pre-built templates convert data into the formats your downstream systems require.

Scheduled Updates: Regularly refreshed data means you’re always working with the latest insights.
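Validation and cleaning checks of this kind can be sketched in a few lines of Python. This is a generic illustration, not Teramot's code: the required fields, the duplicate rule, and the `UNKNOWN` placeholder for missing countries are assumptions chosen for the example. Rejected rows are returned with a reason so they can be reviewed rather than silently dropped.

```python
REQUIRED_FIELDS = ("order_id", "amount")

def is_blank(value):
    """True for missing values and whitespace-only strings."""
    return value is None or not str(value).strip()

def clean(rows, default_country="UNKNOWN"):
    """Validate and normalize rows; return (cleaned, rejected-with-reason)."""
    cleaned, rejected, seen_ids = [], [], set()
    for row in rows:
        # Validation: required fields must be present and non-empty.
        if any(is_blank(row.get(field)) for field in REQUIRED_FIELDS):
            rejected.append((row, "missing required field"))
            continue
        order_id = str(row["order_id"]).strip()
        if order_id in seen_ids:
            rejected.append((row, "duplicate order_id"))
            continue
        try:
            amount = round(float(row["amount"]), 2)
        except (TypeError, ValueError):
            rejected.append((row, "amount is not numeric"))
            continue
        seen_ids.add(order_id)
        # Gap filling and normalization: trim and upper-case the country code.
        country = str(row.get("country") or "").strip().upper() or default_country
        cleaned.append({"order_id": order_id, "amount": amount, "country": country})
    return cleaned, rejected

rows = [
    {"order_id": "A1", "amount": "19.999", "country": " us "},
    {"order_id": "A2", "amount": "5"},
    {"order_id": "A1", "amount": "3.50", "country": "DE"},
    {"order_id": "A3", "amount": "abc"},
    {"order_id": "", "amount": "10"},
]
cleaned, rejected = clean(rows)
```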

At Teramot, we provide solutions that scale with your business needs. Our focus on flexibility, agility, and privacy ensures that, as your data grows, we’re there with you.

With automation at the heart of our approach, our tools allow your data processes to flow smoothly and securely, empowering you to make decisions confidently and quickly.

What Are the Best ETL Tools for Data Engineers?

Selecting the right ETL (Extract, Transform, Load) tools is crucial for data engineers looking to optimize their data workflows.
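To make the three stages concrete, here is a minimal ETL sketch. It assumes a small CSV export as the source and uses an in-memory SQLite database from Python's standard library as a stand-in for a warehouse such as Redshift; the column names and the derived `revenue` field are illustrative.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from a file, API, or database.
RAW_CSV = """region,units,unit_price
north,3,2.50
south,5,1.00
north,2,2.50
"""

def extract(text):
    """Extract: parse CSV text into one dictionary per row."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: cast types and derive a revenue column."""
    return [
        (r["region"], int(r["units"]), int(r["units"]) * float(r["unit_price"]))
        for r in rows
    ]

def load(rows, conn):
    """Load: write the transformed rows into a target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (region TEXT, units INTEGER, revenue REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
summary = conn.execute(
    "SELECT region, SUM(revenue) FROM sales GROUP BY region ORDER BY region"
).fetchall()
print(summary)  # [('north', 12.5), ('south', 5.0)]
```

ETL tools such as those below wrap these same stages in connectors, visual designers, scheduling, and error handling, which matters once sources and volumes multiply.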

One of the standout options is Apache NiFi, known for its intuitive interface and real-time data flow management. It allows engineers to create and modify data pipelines easily, making adjustments on the fly as data needs change.

Another strong contender is Talend, which offers a wide range of data integration solutions.

Talend is particularly valued for its robust transformation capabilities and user-friendly design, making it accessible even for teams with varying levels of technical expertise.

When considering ETL tools, focus on these essential features:

Scalability: Ensure the tool can handle growing data volumes.

Integration Capabilities: Look for support for various data sources, including cloud and on-premises.

User-Friendly Interface: A visual interface can simplify the workflow and enhance collaboration.

Informatica PowerCenter remains a favorite in the industry for its reliability in managing large datasets and its strong data governance features.

For organizations using Amazon Web Services, AWS Glue is an excellent choice as a serverless ETL service that integrates seamlessly with other AWS tools.

By choosing the right ETL tools, data engineers can streamline processes and maintain high data quality.

To facilitate this process, Teramot offers robust engineering data management solutions designed to optimize workflows and improve data accessibility.

Whatever tools you choose, ongoing training and support are crucial for a successful implementation. Companies should invest in training programs that equip their teams to use data management systems effectively.

Continuous monitoring and feedback loops can help refine processes and enhance data quality.

By prioritizing these elements, organizations can ensure that their engineering data management systems not only meet current demands but also adapt to future challenges, driving long-term success with Teramot's expertise.

Here’s what Teramot offers:

Flexibility

Agility

Privacy

Easy integration of different data sources

Up-to-date insights for quick decisions

Increased efficiency through automation

Solutions that grow with your business

Whether you run a small startup or a large enterprise, Teramot is here to help you optimize your data processes.

We’re available worldwide, so no matter where you are, we can support your data automation needs.

Book a demo with us today and experience how our solutions can streamline your processes and enhance your decision-making capabilities.

Which Data Engineering Tools Are Ideal for Automating Workflows?

Automating workflows in data engineering is crucial for improving efficiency and reducing manual errors.

Several tools stand out for their ability to simplify and automate various processes, allowing data engineers to focus on more strategic tasks. Here are some of the top choices:

Apache Airflow: This open-source tool is designed for orchestrating complex workflows. Workflows are defined in Python as directed acyclic graphs (DAGs) of tasks; Airflow's scheduler runs them on a schedule, and its web interface lets teams monitor and manage each run.

The platform’s flexibility allows engineers to build custom automation pipelines tailored to their specific needs.

Prefect: Known for its ease of use, Prefect offers a modern approach to workflow automation. It allows data teams to create data pipelines with Python, making it accessible for engineers who are already familiar with the language.

The tool provides excellent monitoring and error handling, ensuring that workflows run smoothly and efficiently.

Luigi: Developed by Spotify, Luigi is another popular workflow automation tool that simplifies the management of long-running processes. It is particularly effective for building complex data pipelines and ensures that tasks are executed in the correct order.

The visual interface helps data engineers track progress and troubleshoot issues easily.
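Airflow and Luigi both model a pipeline as tasks with dependencies and start each task only after everything it depends on has finished. That ordering logic can be sketched with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The task names are hypothetical, and a real orchestrator adds scheduling, retries, and monitoring on top of this.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join": {"extract_orders", "extract_customers"},
    "aggregate": {"join"},
    "publish_report": {"aggregate"},
}

def run_dag(graph):
    """Run tasks in dependency order and return the order they ran in."""
    executed = []
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    while sorter.is_active():
        # Every task in `ready` has all of its dependencies done,
        # so an orchestrator could run these in parallel.
        for name in sorted(sorter.get_ready()):
            executed.append(name)  # placeholder for actually running the task
            sorter.done(name)
    return executed

order = run_dag(dag)
print(order)
```

In Airflow itself, dependencies are declared between tasks with the `>>` operator (for example `extract >> transform >> load`), and the scheduler works out the execution order.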

When selecting a tool for automating workflows, consider the following factors:

Integration: Ensure the tool can easily connect with your existing data sources and systems.

Scalability: Choose a tool that can grow with your data needs, accommodating increased workloads without compromising performance.

Community Support: Tools with active communities offer valuable resources, documentation, and plugins that can enhance functionality.

By leveraging these automation tools, data engineering teams can streamline their processes, reduce manual effort, and improve overall productivity, ultimately leading to more robust data operations.
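Much of the manual effort these tools remove is rerunning tasks that fail for transient reasons, such as a source that is briefly unavailable; Prefect, for example, lets you configure retries on a task. The decorator below sketches the underlying retry-with-backoff idea in plain Python. It is not Prefect's (or any orchestrator's) API, and `fetch_batch` is a hypothetical flaky task.

```python
import functools
import time

def with_retries(retries=3, delay_seconds=0.0, exceptions=(Exception,)):
    """Decorator: re-run a task up to `retries` extra times when it raises one of `exceptions`."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(retries + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions as exc:
                    if attempt == retries:
                        raise  # out of attempts: surface the last error to the caller
                    print(f"{func.__name__} failed ({exc!r}); retry {attempt + 1} of {retries}")
                    time.sleep(delay_seconds * 2 ** attempt)  # exponential backoff
        return wrapper
    return decorator

calls = {"count": 0}

@with_retries(retries=2, exceptions=(ConnectionError,))
def fetch_batch():
    """Hypothetical flaky task: fails twice, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source unavailable")
    return ["row1", "row2"]

result = fetch_batch()
```

Only the exception types listed in `exceptions` are retried, so a genuine bug (for example a `KeyError`) fails immediately instead of being masked by repeated attempts.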

Recommended reading: Data Automation Services

What factors should businesses consider when choosing data engineering tools?

When selecting data engineering tools, compatibility with existing systems is one of the most important factors to evaluate.

Integrating new tools smoothly with your current infrastructure avoids disruptions and maximizes the value of past investments.

Businesses should look for solutions that support easy integration with various data sources, enabling seamless data flow and minimizing manual work.

Another key consideration is scalability. Data needs are likely to grow, and the chosen tools should adapt accordingly.

Scalable solutions prevent the need for frequent upgrades, reducing both cost and complexity in the long term.

Ideally, a data engineering tool should support growth in data volume, processing power, and complexity without compromising on performance.
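One common way to keep performance steady as volume grows is to process data in fixed-size chunks, so memory use depends on the chunk size rather than on the size of the dataset. A minimal sketch, assuming a generator stands in for a large file or table scan and a chunk size of 10,000 rows; engines such as Spark apply the same principle through partitioning.

```python
from itertools import islice

def chunks(iterable, size):
    """Yield lists of at most `size` items; only one chunk is held in memory at a time."""
    iterator = iter(iterable)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch

def chunked_mean(values, chunk_size=10_000):
    """Mean of a stream that may be too large to load into memory at once."""
    count, total = 0, 0.0
    for batch in chunks(values, chunk_size):
        count += len(batch)
        total += sum(batch)
    return total / count if count else float("nan")

# A generator stands in for a large source such as a file or a table scan.
readings = (x % 100 for x in range(1_000_000))
print(chunked_mean(readings))  # 49.5
```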

Lastly, businesses must prioritize data security and compliance. With increasing data regulations, it’s essential to ensure tools meet industry standards for data protection. Key features to look for include:

Encryption: Both in transit and at rest to protect sensitive data.

Access Controls: Role-based access that aligns with internal policies.

Compliance: Evidence that the tool supports regulations such as GDPR or HIPAA and holds certifications such as ISO/IEC 27001, depending on your industry.
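Role-based access control can be illustrated with a small deny-by-default check. The roles, users, and permission strings below are hypothetical, and production systems typically rely on the access controls built into the warehouse or cloud platform rather than application code like this. Encryption is not shown because it should be done with a vetted cryptography library or the platform's built-in features.

```python
# Hypothetical role-to-permission mapping; real systems load this from policy configuration.
ROLE_PERMISSIONS = {
    "analyst": {"read:sales"},
    "engineer": {"read:sales", "write:sales", "read:raw"},
    "admin": {"read:sales", "write:sales", "read:raw", "manage:users"},
}

# Hypothetical user-to-role assignments.
USER_ROLES = {"dana": {"analyst"}, "lee": {"engineer"}}

def permissions_for(user):
    """Union of the permissions granted by all of a user's roles (empty for unknown users)."""
    roles = USER_ROLES.get(user, set())
    return set().union(*(ROLE_PERMISSIONS.get(role, set()) for role in roles))

def authorize(user, permission):
    """Deny by default: allow only if at least one of the user's roles grants the permission."""
    return permission in permissions_for(user)

print(authorize("dana", "read:sales"))   # True
print(authorize("dana", "write:sales"))  # False
```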

Choosing the right data engineering tool means balancing these factors to ensure that the tool aligns with both current operations and future goals.

Frequently Asked Questions

What is the best tool for data engineering?

The best tool for data engineering often depends on specific use cases, but Apache Spark stands out due to its speed and versatility, capable of handling large-scale data processing and analytics.

How do data engineers use Python?

Data engineers use Python for its extensive libraries like Pandas and NumPy, which facilitate data manipulation and analysis. Approximately 40% of data engineers leverage Python for ETL processes, automation, and building data pipelines, making it an essential tool in their toolkit.

Are ETL tools essential for data engineering?

Yes, ETL (Extract, Transform, Load) tools are crucial for data engineering, as they streamline the process of moving data between systems. A study shows that organizations using ETL tools can improve their data processing efficiency by over 30%, allowing for better data management and analytics.

What role do data engineering tools play in automating the handling of large datasets?

Data engineering tools automate the handling of large datasets by facilitating data extraction, transformation, and loading processes. Automation reduces the time and effort needed to manage data, enabling data engineers to focus on analysis and insights.